Linear Algebra and Algorithms

For a comprehensive subject on linear algebra the reader is referred to the book [Lang(1987)].

Linear Algebra

Basic terms and definitions

A vector is a one-dimensional array of scalars, i.e. real or complex numbers. We will denote a vector by lowercase bold letters, like e.g.  . In linear algebra two forms of vectors are distinguished: row vector and column vector. For example

. In linear algebra two forms of vectors are distinguished: row vector and column vector. For example

is a row vector and

is a column vector.

An  matrix is a two-dimensional array of scalars having

matrix is a two-dimensional array of scalars having  rows an

rows an  columns. The scalar elements of matrix can be real or complex numbers. We consider only real matrices, i.e. matrices with real elements. Matrices will be denoted by uppercase bold letters. For example matrix

columns. The scalar elements of matrix can be real or complex numbers. We consider only real matrices, i.e. matrices with real elements. Matrices will be denoted by uppercase bold letters. For example matrix

is a  matrix. Matrices can be seen as generalization of vectors, and thus vectors as special cases of matrices, where either the number of columns or rows is one. So the

matrix. Matrices can be seen as generalization of vectors, and thus vectors as special cases of matrices, where either the number of columns or rows is one. So the  -element row vector is an

-element row vector is an  matrix and the

matrix and the  -element column vector is an

-element column vector is an  matrix.

matrix.

A square matrix has the same number of rows and columns, i.e. is of type  , and

, and  is called as the order of the square matrix. An example of a

is called as the order of the square matrix. An example of a  square matrix

square matrix  is given below.

is given below.

A diagonal matrix is a special square matrix, in which only the diagonal elements can differ from  . An example for the diagonal matrix

. An example for the diagonal matrix  is given as

is given as

The diagonal matrix can be given also by means of a diag() operation by listing only the diagonal elements of the matrix as its arguments. For example the diagonal matrix  can be given on such a way as

can be given on such a way as

The element in the  -th row and

-th row and  -th column of a matrix is referred as the

-th column of a matrix is referred as the  -th element of that matrix and is denoted by

-th element of that matrix and is denoted by  . It is usual to construct a matrix by the help of the group operator

. It is usual to construct a matrix by the help of the group operator ![{\textstyle [\ ]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/cd5b89a327df19fafa2e0a27ef0887d769ed5306) . If

. If  denotes a defining formula of double indexed scalars for some range of indices

denotes a defining formula of double indexed scalars for some range of indices  and

and  then matrix

then matrix  can be given by

can be given by

![{\displaystyle {\bf {A}}=[\ a_{ij}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/59d5a09497c72e293bdef864a49c188dc7850c6c)

which means matrix  is composed as grouping the scalars

is composed as grouping the scalars  by two dimensions, where

by two dimensions, where  describes the row index and

describes the row index and  the column index. Therefore the

the column index. Therefore the  -th element of matrix

-th element of matrix  is set to

is set to  for every values of

for every values of  and

and  in their given ranges. For example if the double indexed scalars

in their given ranges. For example if the double indexed scalars  are defined as

are defined as

then defining matrix  as

as

![{\displaystyle {\bf {I}}=[\ z_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/b7b8a83ccc737859e53e4f93122ffc4809d98401)

leads to

Matrix  is called

is called  identity matrix. The

identity matrix. The  identity matrix for any

identity matrix for any  is characterized by having the value

is characterized by having the value  in its every diagonal positions and all its other elements are

in its every diagonal positions and all its other elements are  . Therefore the identity matrix is a special diagonal matrix. Let

. Therefore the identity matrix is a special diagonal matrix. Let  stands for the column vector having the value

stands for the column vector having the value  on its

on its  -th position, while its every other elements are

-th position, while its every other elements are  . In an

. In an  -dimensional Euclidean space the

-dimensional Euclidean space the  vector

vector  for

for  represents the unit vector in the

represents the unit vector in the  -th dimension. For example for

-th dimension. For example for  the unit vectors

the unit vectors  are given as

are given as

Then the  identity matrix can be also expressed as row vector of the unit vectors as

identity matrix can be also expressed as row vector of the unit vectors as

Elementary matrix operations

An elementary univariate operation on matrices is the transpose operation. The transpose of matrix  is defined by exchanging its

is defined by exchanging its  -th element by its

-th element by its  -th element for each pair of

-th element for each pair of  and

and  in their given ranges. The transpose of a matrix is called transposed matrix and transpose of a given matrix

in their given ranges. The transpose of a matrix is called transposed matrix and transpose of a given matrix  is denoted by

is denoted by  . For example the transpose of the above defined matrix

. For example the transpose of the above defined matrix  is given by

is given by

The transpose of the  matrix is an

matrix is an  matrix and thus the transpose operation changes the dimensionality of the matrix for

matrix and thus the transpose operation changes the dimensionality of the matrix for  . The transpose of square matrix remains a square matrix. The transpose of the

. The transpose of square matrix remains a square matrix. The transpose of the  row matrix is an

row matrix is an  column matrix and vice versa.

column matrix and vice versa.

The multiplication of matrix by a scalar is defined elementwise, i.e.by multiplying each element of the matrix by the given scalar. For example matrix  multiplied by

multiplied by  gives

gives

Two matrix can be added if they are of same type, i.e. both are  . Similarly a matrix can be substracted form another one if they are of same type. For example if

. Similarly a matrix can be substracted form another one if they are of same type. For example if  matrix

matrix  is given as

is given as

then the matrix  as the sum

as the sum  are given by

are given by

Two matrix can be multiplied if the number of columns of the first matrix and the number of rows of the second one are the same. The multiplication of the  matrix

matrix  by the

by the  matrix

matrix  , gives an

, gives an  times product matrix

times product matrix  . Let the

. Let the  -th,

-th,  -th and

-th and  -th elements of matrix

-th elements of matrix  ,

,  and

and  be

be  ,

,  and

and  , respectively. Then the matrix multiplication

, respectively. Then the matrix multiplication

is defined by the elements of  ,

,  and

and  as

as

Hence the  -th element of the product matrix

-th element of the product matrix  is the (scalar) product of the

is the (scalar) product of the  -th row of matrix

-th row of matrix  and the

and the  -th column of matrix

-th column of matrix  both consisting of

both consisting of  elements. In order to illustrate the matrix multiplication we define the

elements. In order to illustrate the matrix multiplication we define the  times matrix

times matrix  as

as

Then the matrix product  results in a

results in a  times matrix

times matrix  , which is given by

, which is given by

Let  stands for the column vector having every element set to value

stands for the column vector having every element set to value  . It is called unit vector. Multiplying a matrix from right by

. It is called unit vector. Multiplying a matrix from right by  gives the row sums of that matrix in a column vector form. For example

gives the row sums of that matrix in a column vector form. For example

gives the row sum of matrix

.

The addition of matrices is commutative and associative, i.e. the following relations hold for any  matrices

matrices  ,

,  and

and  :

:

The matrix multiplication is distributive with respect to the matrix addition and matrix multiplication is associative, i.e. the following relations hold for any  matrix

matrix  ,

,  matrices

matrices  and

and  and

and  matrix

matrix  :

:

However matrix multiplication is in general, except from some special cases, NOT commutative, i.e. for  matrices

matrices  and

and

Therefore multiplication/product of matrix  and

and  is not specified. Instead one should speak about multiplying matrix

is not specified. Instead one should speak about multiplying matrix  by

by  from right (by default) or from left.

from right (by default) or from left.

The special classes of matrices, for which commutativity holds includes

- multiplication by identity matrix

:

:  ,

,

- multiplication of diagonal matrices

and

and  with each other:

with each other:  ,

,

- multiplication of two powers of the same matrix

:

:  and

and

- multiplication of two polynomials of the same matrix

,

,  and

and  :

:  , which follows from the commutativity of two powers of the same matrix by repetitive application of distributivity of the matrix multiplication.

, which follows from the commutativity of two powers of the same matrix by repetitive application of distributivity of the matrix multiplication.

Further useful relations are

The major difference of the elementary matrix operations comparing to their scalar counterparts is that matrix multiplication is not commutative in general. This has far-reaching consequences for the matrix theory.

Linear independence of vectors

The expression

is called as a linear combination of the vectors  and the scalars

and the scalars  are weights. Linear, since the used operations

are weights. Linear, since the used operations  and constant multiplication are linear.

and constant multiplication are linear.

For example each vector  pointing to a point in the

pointing to a point in the  -dimensional Euclidean space can be given as a linear combinations of the vectors

-dimensional Euclidean space can be given as a linear combinations of the vectors  ,

,  and

and  as

as

We say that the vectors  ,

,  and

and  span the

span the  -dimensional Euclidean space and hence generate the whole

-dimensional Euclidean space and hence generate the whole  -dimensional space. There are infinite many other vector combinations, which span the

-dimensional space. There are infinite many other vector combinations, which span the  -dimensional Euclidean space, the vector are not necessarily be perpendicular to each other, like e.g.

-dimensional Euclidean space, the vector are not necessarily be perpendicular to each other, like e.g.  ,

,  and

and  .

.

However if we consider the vectors  ,

,  and

and  then we can only cover the vectors at the plane with

then we can only cover the vectors at the plane with  by the linear combinations of

by the linear combinations of  ,

,  and

and  . In this case we say that the vectors

. In this case we say that the vectors  ,

,  and

and  generate (or span) only a (

generate (or span) only a ( -dimensional) sub-space of the whole

-dimensional) sub-space of the whole  -dimensional Euclidean space. This is because the 3rd vector

-dimensional Euclidean space. This is because the 3rd vector  can be given as the linear combination of the other two as

can be given as the linear combination of the other two as

This phenomena is characterised by saying that the vectors  ,

,  and

and  are not linear independent of each other. In fact not only

are not linear independent of each other. In fact not only  can be given as linear combination of the other two, but any of the three vectors can be given as a linear combination of the other two. The vectors

can be given as linear combination of the other two, but any of the three vectors can be given as a linear combination of the other two. The vectors  ,

,  and

and  are linear dependent.

are linear dependent.

However the  ,

,  and

and  behave on another way, none of them can be given as linear combination of the other two. We say the vectors

behave on another way, none of them can be given as linear combination of the other two. We say the vectors  ,

,  and

and  are linear independent. Among the vectors

are linear independent. Among the vectors  ,

,  and

and  only any two of them are linear independent of each other.

only any two of them are linear independent of each other.

It can be seen that a given  number of

number of  -dimensional vectors determine the whole

-dimensional vectors determine the whole  -dimensional space if and only if they are linear independent. If they are linear dependent and only

-dimensional space if and only if they are linear independent. If they are linear dependent and only  of them are linear independent, then they generate (or span) only a

of them are linear independent, then they generate (or span) only a  -dimensional sub-space of the whole

-dimensional sub-space of the whole  -dimensional space.

-dimensional space.

If the  vectors are collected in a matrix, for example each vector put in a different row of the matrix, then the maximum number number of linear independent row vectors of the matrix is called as the rank of that matrix. The vectors can be put also in the different columns of a matrix, in which case the the maximum number of linear independent column vectors of the matrix is the rank of that matrix. It can be seen that the maximum number of linear independent rows and the maximum number of linear independent columns of a matrix are the same, so each matrix has a unique rank. The rank of the matrix

vectors are collected in a matrix, for example each vector put in a different row of the matrix, then the maximum number number of linear independent row vectors of the matrix is called as the rank of that matrix. The vectors can be put also in the different columns of a matrix, in which case the the maximum number of linear independent column vectors of the matrix is the rank of that matrix. It can be seen that the maximum number of linear independent rows and the maximum number of linear independent columns of a matrix are the same, so each matrix has a unique rank. The rank of the matrix  is denoted by

is denoted by  . Based on the above the rank of a matrix is the dimension of the subspace generated by its columns or rows as vectors.

. Based on the above the rank of a matrix is the dimension of the subspace generated by its columns or rows as vectors.

It follows that the the statements below hold for the rank of a matrix.

- The rank of an

matrix

matrix  is at most the smaller of

is at most the smaller of  and

and  , in other words

, in other words

- The rank of the

square matrix

square matrix  is at most its order, in other words

is at most its order, in other words

Determinant

The determinant is a scalar assigned to a square matrix. It depends on every elements of the matrix, hence determinant is a scalar function of the square matrix. The determinant of the  matrix

matrix  is denoted as

is denoted as  (or

(or  ) and

) and  .

.

Expression of determinant - Leibniz formula

Expression of determinant - Leibniz formula

The determinant is a sum of signed products, where each product is composed as a multiplication of elements taken from each row of the square matrix, each of them at different column positions and every such products are included in the sum. In other words the determinant of  matrix

matrix ![{\textstyle {\bf {A}}=[\ a_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/d5b21c5e2d0f6665843c07a9a31ad40d75d2706a) is given by

is given by

where  is a permutation of the column indices

is a permutation of the column indices  and the sign function

and the sign function  assigns

assigns  to a permutation

to a permutation  if it can be created by even number of exchanges of two numbers starting from

if it can be created by even number of exchanges of two numbers starting from  and otherwise it gives

and otherwise it gives  . The number of products in the sum equals to the number of possible permutations, which is

. The number of products in the sum equals to the number of possible permutations, which is  . This expression of the determinant is called Leibniz formula.

. This expression of the determinant is called Leibniz formula.

Determinant of a

Determinant of a  matrix

matrix

Let the  matrix

matrix  given as

given as

For  there are only two permutations, therefore the expression of

there are only two permutations, therefore the expression of  can be given as

can be given as

Determinant of a

Determinant of a  matrix - Sarrus rule

matrix - Sarrus rule

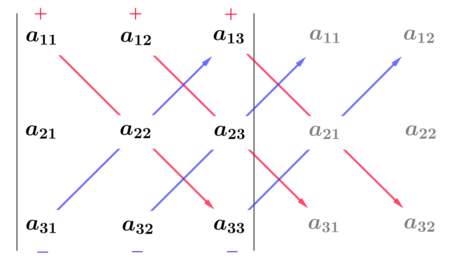

Let the  matrix

matrix  given as

given as ![{\textstyle {\bf {A}}=[\ a_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/d5b21c5e2d0f6665843c07a9a31ad40d75d2706a) , in other words

, in other words

For  there are

there are  permutations, three of them are encountered with sign

permutations, three of them are encountered with sign  and the other three with

and the other three with  . The expression of

. The expression of  can be given as

can be given as

This expression can be easily memorized by the help of the Sarrus rule, see in Figure 12. Copying the first two columns of the matrix right to it, the products with positive sign can be obtained along the diagonals from top to down and right, while the products with negative sign are the ones from down to top and right.

Unfortunately the schema of Sarrus can not be generalized for higher dimensions.

Geometric interpretation

Geometric interpretation

The columns of the  matrix

matrix  can be interpreted as vectors in the

can be interpreted as vectors in the  -dimensional Euclidean space. For

-dimensional Euclidean space. For  these vectors span a parallelogram, and the determinant is exactly the signed value of the area of this parallelogram. This is shown in Figure 13.

these vectors span a parallelogram, and the determinant is exactly the signed value of the area of this parallelogram. This is shown in Figure 13.

For  the column vectors of

the column vectors of  , denoted by

, denoted by  ,

,  and

and  , form a parallelepiped and the determinant gives the signed volume of this parallelepiped, see in Figure 14.

, form a parallelepiped and the determinant gives the signed volume of this parallelepiped, see in Figure 14.

This interpretation holds also for higher dimensions. So the column vectors of  matrix

matrix  span a parallelotope in the

span a parallelotope in the  -dimensional space and the determinant of

-dimensional space and the determinant of  gives its signed

gives its signed  -dimensional volume.

-dimensional volume.

Properties of determinant

Properties of determinant

Determinant of several special matrices

- Determinant of identity matrix

is

is  .

.

- Determinant of diagonal matrix

is

is  .

.

The determinant of  matrix

matrix  has the following properties regarding row and column manipulations.

has the following properties regarding row and column manipulations.

- Multiplying matrix

by constant

by constant  results in multiplication of the determinant by

results in multiplication of the determinant by  , in other words

, in other words

- Multiplying any row or any column of

by constant

by constant  leads to a multiplication of the determinant of

leads to a multiplication of the determinant of  by

by  . In other words the determinant of the modified matrix is

. In other words the determinant of the modified matrix is  .

.

- Exchanging two rows or two columns of

leads to a multiplication of the determinant by

leads to a multiplication of the determinant by  .

.

- Adding a scalar multiplication of another row to a row of

or adding a scalar multiplication of another column to a column of

or adding a scalar multiplication of another column to a column of  does not change the value of the determinant.

does not change the value of the determinant.

Further useful properties of the determinant are

Laplace expansion

Laplace expansion

The minor  of of the

of of the  matrix

matrix ![{\textstyle {\bf {A}}=[\ a_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/d5b21c5e2d0f6665843c07a9a31ad40d75d2706a) is defined as the determinant of the

is defined as the determinant of the  submatrix of

submatrix of  , which is obtained by omitting the

, which is obtained by omitting the  -th row and

-th row and  -th column of the matrix

-th column of the matrix  , for

, for  . The determinant of the matrix

. The determinant of the matrix  can be expressed by expanding it along its

can be expressed by expanding it along its  -th row. This gives a sum of signed product of each element of the

-th row. This gives a sum of signed product of each element of the  -th row by its corresponding minor. In other words

-th row by its corresponding minor. In other words

This is called as Laplace expansion along the

-th row of matrix

.

As an example we show the Laplace expansion of the  -dimensional square matrix

-dimensional square matrix  with general notations along its 1st row, which leads to

with general notations along its 1st row, which leads to

The Laplace expansion along the

-th column of matrix

can be also defined on similar way.

Determinant and linear independence

Determinant and linear independence

One of the most important use of the determinant is its relation to linear independence. The determinant of the  matrix

matrix  is non-zero if and only if the rows (and columns) of matrix

is non-zero if and only if the rows (and columns) of matrix  are linear independent.

are linear independent.

This can be seen e.g. by the help of the geometric interpretation of the determinant. If the column vectors of matrix  are linear independent then they generate the whole

are linear independent then they generate the whole  -dimensional space and thus the

-dimensional space and thus the  -volume of the parallelotope spanned by them must be non-zero and therefore also the determinant is non-zero. However if the column vectors of matrix

-volume of the parallelotope spanned by them must be non-zero and therefore also the determinant is non-zero. However if the column vectors of matrix  are linear dependent then

are linear dependent then  column vectors of matrix

column vectors of matrix  generate only a

generate only a  -dimensional subspace of the whole

-dimensional subspace of the whole  -dimensional space, which implies that the

-dimensional space, which implies that the  -volume of the parallelotope spanned by them must be zero and hence also the determinant is zero.

-volume of the parallelotope spanned by them must be zero and hence also the determinant is zero.

Inverse matrix

We consider the question whether a matrix does exist, which multiplied by the square matrix  results in the identity matrix. It would be an analogue of the reciprocal number in the set of real (or complex) numbers. Immediately the following questions arise

results in the identity matrix. It would be an analogue of the reciprocal number in the set of real (or complex) numbers. Immediately the following questions arise

- What is the condition of the existence of an such matrix ? Not every number has reciprocal also in the set of real numbers (i.e. the number zero).

- If such matrices exist for multiplying by

both from left and right, then they are the same ?, This question is due to the general non-commutativity of the matrices.

both from left and right, then they are the same ?, This question is due to the general non-commutativity of the matrices.

The adjugate matrix

The adjugate matrix

The adjugate matrix of the  matrix

matrix  is denoted by

is denoted by  , and it is defined as the transpose of the matrix of the signed minors. More precisely

, and it is defined as the transpose of the matrix of the signed minors. More precisely  is given by its elements as

is given by its elements as

The importance of the adjugate matrix lies in the following relation, which holds for every square matrix  :

:

The inverse matrix

The inverse matrix

The  matrix

matrix  is an invertable matrix if there exist a matrix, which is multiplied by

is an invertable matrix if there exist a matrix, which is multiplied by  from left gives the identity matrix and multiplied by

from left gives the identity matrix and multiplied by  from right also gives the identity matrix. This matrix is called the inverse matrix of

from right also gives the identity matrix. This matrix is called the inverse matrix of  and denoted by

and denoted by  . Thus for the inverse matrix

. Thus for the inverse matrix  holds the following defining relation:

holds the following defining relation:

Dividing the above relation for  by

by  , it leads to expression of the inverse matrix

, it leads to expression of the inverse matrix  as

as

Since the adjugate matrix always exists, the inverse matrix  exists if and only the determinant of

exists if and only the determinant of  is non-zero. The square matrix which is not invertable is also called as singular matrix. Similarly the invertable matrix is also called as non-singular. Therefore

is non-zero. The square matrix which is not invertable is also called as singular matrix. Similarly the invertable matrix is also called as non-singular. Therefore

- The square matrix is singular if and only if its determinant is zero.

- The square matrix is non-singular if and only if its determinant is non-zero.

Inverse of a

Inverse of a  matrix

matrix

Let the  matrix

matrix  given as

given as

The determinant of  can be given as

can be given as  Due to

Due to  all the minors are scalars. Hence the inverse matrix

all the minors are scalars. Hence the inverse matrix  can be expressed as

can be expressed as

This can be checked by multiplying  by

by

which is  as expected. It is easy to remember the nominator of the inverse of

as expected. It is easy to remember the nominator of the inverse of  matrix: the values in the main diagonal (a and d) are exchanged and the values in the secondary diagonal (b and c) are multiplied by

matrix: the values in the main diagonal (a and d) are exchanged and the values in the secondary diagonal (b and c) are multiplied by  .

.

Inverse of a

Inverse of a  matrix

matrix

Let the  matrix

matrix  given as

given as ![{\textstyle {\bf {A}}=[\ a_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/d5b21c5e2d0f6665843c07a9a31ad40d75d2706a) , in other words

, in other words

Due to  all the minors are determinants of

all the minors are determinants of  -dimensional matrix. Hence the inverse matrix

-dimensional matrix. Hence the inverse matrix  can be expressed as

can be expressed as

Properties of the inverse matrix

Properties of the inverse matrix

Inverse matrix of several special matrices

- Inverse matrix of identity matrix is itself.

is

is  .

.

- Inverse matrix of diagonal matrix is also diagonal.

,

,

:

:

.

.

Further useful properties of the inverse matrix are

Linear systems of equations

The linear system of equations consisting of  unknowns

unknowns  and

and  equations can be given as

equations can be given as

This can be also called as

linear system of equations. By introducing the

matrix

and the

column vector

and the

column vector

as

![{\displaystyle {\bf {A}}=[\ a_{ij}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/59d5a09497c72e293bdef864a49c188dc7850c6c)

the system of linear equations can be rewritten in matrix vector form as

Here

is the coefficient matrix,

is a column vector of unknowns or unknown column vector and

is given column vector.

Reformulation - linear combination of column vectors of matrix

Reformulation - linear combination of column vectors of matrix

The above system of linear equations can be rewritten by the help of the group operator ![{\textstyle [\ ]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/cd5b89a327df19fafa2e0a27ef0887d769ed5306) on index

on index  as

as

![{\displaystyle \ =[\ b_{i}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/24c1427c1ec7c4723e0e65d447d0b568448e86e4)

where ![{\textstyle [\ b_{i}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/cd0e5fcaa20f7c5ff5117682f1836d0300a26145) is a vector

is a vector  due to the application of the group operator

due to the application of the group operator ![{\textstyle [\ ]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/cd5b89a327df19fafa2e0a27ef0887d769ed5306) . The order of grouping on index

. The order of grouping on index  and summing can be exchanged on the left hand side of the equation, which gives

and summing can be exchanged on the left hand side of the equation, which gives

![{\displaystyle \sum _{j=1}^{n}[\ a_{ij}]\ x_{j}=[\ b_{i}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/cba9bcf9f9c99bb3ece189adb0dd05bdcbac3d28)

Introducing the column vectors composed from the columns of  as

as

the relation can be further rearranged as

Based on this formulation, the problem of solving the above  linear system of equations can be seen as finding the weights

linear system of equations can be seen as finding the weights  -s,

-s,  with which the given vector

with which the given vector  can be composed as the linear combination of the

can be composed as the linear combination of the  - dimensional vectors

- dimensional vectors  ,

,  .

.

This interpretation enables to establish criteria for solvability for different cases of the  linear system of equations depending on the relation between

linear system of equations depending on the relation between  and

and  as well as the linear independence of the vectors

as well as the linear independence of the vectors  ,

,  and

and  .

.

Solvability - general case

Solvability - general case

The following statements follow from the above linear combination interpretation for the solvability of the  linear system of equations.

linear system of equations.

- The linear system of equations is solvable if and only if vector

can be composed as linear combination of the vectors

can be composed as linear combination of the vectors  ,

,  , in other words if the vectors

, in other words if the vectors  ,

,  and vector

and vector  are linear dependent. This means that adding vector

are linear dependent. This means that adding vector  to the set of vectors

to the set of vectors  ,

,  does not increase the number of linear independent vectors in the set. This is equivalent to the statement that the extended matrix

does not increase the number of linear independent vectors in the set. This is equivalent to the statement that the extended matrix  obtained as adding column vector

obtained as adding column vector  as

as  column to matrix

column to matrix  , has the same rank as matrix

, has the same rank as matrix  .

.

- If

then two cases must be distinguished according to the relation between

then two cases must be distinguished according to the relation between  and

and  .

.

- If

then the vectors

then the vectors  ,

,  generate the

generate the  -dimensional space, in which the weights of the

-dimensional space, in which the weights of the  -dimensional vector

-dimensional vector  is unique, therefore the system has a unique solution. Note that in this case

is unique, therefore the system has a unique solution. Note that in this case  must be, since

must be, since  can not be greater than

can not be greater than  .

.

- If

then the vectors

then the vectors  ,

,  generate a

generate a  -dimensional space, in which only

-dimensional space, in which only  components of the the

components of the the  -dimensional vector

-dimensional vector  can be composed by linear combination of the the vectors

can be composed by linear combination of the the vectors  ,

,  . Therefore in this case

. Therefore in this case  unknowns can be freely selected and the system has infinite many solutions. Note that in this case

unknowns can be freely selected and the system has infinite many solutions. Note that in this case  , so it can be also smaller than

, so it can be also smaller than  .

.

The different cases of solvability and their criteria are summarized in Table 1.

Solvability - square matrix

Solvability - square matrix

Several further findings can be obtained for the special case of  square matrix

square matrix  . If

. If  then matrix

then matrix  is non-singular and

is non-singular and  . Otherwise matrix

. Otherwise matrix  is singular and

is singular and

Taking all these into account the solvability conditions for the square matrix  can be summarized in a slightly simplified form, which is shown in Table 2.

can be summarized in a slightly simplified form, which is shown in Table 2.

Eigenvectors, eigenvalues, spectral decomposition

For a given  square matrix

square matrix  finding the scalars

finding the scalars  and

and  column vectors

column vectors  satisfying the equation

satisfying the equation

called as eigenvalue problem of matrix  . The scalars

. The scalars  satisfying the equation are called eigenvalues of matrix

satisfying the equation are called eigenvalues of matrix  and the vectors

and the vectors  satisfying the equation are called eigenvectors of matrix

satisfying the equation are called eigenvectors of matrix  . Transforming an eigenvector by multiplying it by

. Transforming an eigenvector by multiplying it by  gives a vector

gives a vector  being parallel to the considered eigenvector. Hence the transformation by

being parallel to the considered eigenvector. Hence the transformation by  does not change the direction of the eigenvectors and their length will be multiplied by the eigenvalue. This explains the names eigenvectors and eigenvalues.

does not change the direction of the eigenvectors and their length will be multiplied by the eigenvalue. This explains the names eigenvectors and eigenvalues.

Characteristic polynomial

Characteristic polynomial

Rearranging the above relation gives

This is homogeneous system of linear equations, which has non-trivial solution only if the determinant of the coefficient matrix  is zero, in other words, if

is zero, in other words, if

Observe that  arises in each element of the main diagonal of matrix

arises in each element of the main diagonal of matrix  . It follows that the permutation giving the products of the main diagonal and therefore also the determinant of this matrix is an

. It follows that the permutation giving the products of the main diagonal and therefore also the determinant of this matrix is an  -order polynomial of

-order polynomial of  . This

. This  -order polynomial of

-order polynomial of  is called the characteristic polynomial of matrix

is called the characteristic polynomial of matrix  . Its solutions give the eigenvalues. According to the fundamental theory of algebra, an

. Its solutions give the eigenvalues. According to the fundamental theory of algebra, an  -order polynomial has exactly

-order polynomial has exactly  solutions in the set of complex numbers. It follows that there exist

solutions in the set of complex numbers. It follows that there exist  complex eigenvalues implying at most

complex eigenvalues implying at most  real eigenvalues, from which some of them can arise more times.

real eigenvalues, from which some of them can arise more times.

Algebraic multiplicity and geometric multiplicity

Algebraic multiplicity and geometric multiplicity

Let  ,

,  ,

,  denote the eigenvalues of matrix

denote the eigenvalues of matrix  . The number of arising of the eigenvalue

. The number of arising of the eigenvalue  in the solution of the characteristic polynomial is called the algebraic multiplicity

in the solution of the characteristic polynomial is called the algebraic multiplicity  . For each

. For each  the homogeneous system

the homogeneous system

determines the eigenvectors belonging to the eigenvalue

. Due to the homogeneous character of the system, its solution must have at least one free parameter. This is manifested in the fact that any constant multiplication of an eigenvector is also a solution of a system. Hence an eigenvector is determined only up to a constant multiplication, i.e. only the direction (in the

-dimensional Euclidean system) of the eigenvector is determined, but its length not. The number of linearly independent eigenvectors belonging to the eigenvalue

can be one or more depending on the rank of matrix

. In fact linearly independent eigenvectors belonging to the eigenvalue

is the number of freely selectable parameter, which is

, which is called the geometric multiplicity of

.

An example for determining eigenvalues an eigenvectors

An example for determining eigenvalues an eigenvectors

Matrix  is given as

is given as

- Computing the eigenvalues of the matrix

- Determining the eigenvectors of the matrix

- The eigenvector belonging to

- The eigenvector corresponding to

or

or  , since the eigenvectors are only determined up to a multiplicative constant.

, since the eigenvectors are only determined up to a multiplicative constant.

Left and right eigenvalues and eigenvectors

Left and right eigenvalues and eigenvectors

So far we considered the form of the eigenvalue problem, in which the vector  being a column vector and hence locates on the right side of matrix

being a column vector and hence locates on the right side of matrix  . From this reason this eigenvalue problem is also called as right eigenvalue problem, and the

. From this reason this eigenvalue problem is also called as right eigenvalue problem, and the  -s and

-s and  -s are also called as right eigenvalues and right eigenvectors of matrix

-s are also called as right eigenvalues and right eigenvectors of matrix  , respectively.

, respectively.

There is a similar eigenvalue problem, in which the vector  is a row vector and arises on the left hand side of the matrix

is a row vector and arises on the left hand side of the matrix  as

as

and it called as left eigenvalue problem. The solution  -s and

-s and  -s are also called as left eigenvalues and left eigenvectors of matrix

-s are also called as left eigenvalues and left eigenvectors of matrix  , respectively.

, respectively.

The two kind of eigenvalue problem can be related by transposing them into each other. For example transposing the right eigenvalue problem gives

This shows the relation between the two kind of eigenvalue problem, which can be formulated as follows. The right side eigenvalues and eigenvectors of square matrix  are the left side eigenvalues and eigenvectors of the transpose of matrix

are the left side eigenvalues and eigenvectors of the transpose of matrix  , respectively. It follows that for a symmetric matrix

, respectively. It follows that for a symmetric matrix  the right and the left side eigenvalues and eigenvectors are the same, respectively, due to

the right and the left side eigenvalues and eigenvectors are the same, respectively, due to  S

S .

.

Properties of eigenvalues

Properties of eigenvalues

Let  and

and  stands for the eigenvalues and the

stands for the eigenvalues and the  -th eigenvalue,

-th eigenvalue,  of

of  matrix

matrix  , respectively. Useful properties of the eigenvalues are given as

, respectively. Useful properties of the eigenvalues are given as

Spectral decomposition

Spectral decomposition

The spectral decomposition of  square matrix

square matrix  is a factorization into the canonical form as

is a factorization into the canonical form as

where  is a diagonal matrix. The spectral decomposition is also called eigendecomposition or diagonalisation.

is a diagonal matrix. The spectral decomposition is also called eigendecomposition or diagonalisation.

The matrices arising in the factorization can be interpreted as follows. The diagonal elements of the diagonal matrix  are the right eigenvalues of matrix

are the right eigenvalues of matrix  . Note that each eigenvalue arises in the diagonal as many times as its algebraic multiplicity. The column vectors of matrix

. Note that each eigenvalue arises in the diagonal as many times as its algebraic multiplicity. The column vectors of matrix  are the right eigenvectors

are the right eigenvectors  belonging to the

belonging to the  eigenvalues,

eigenvalues,  . Thus

. Thus  and

and  satisfies

satisfies

which can be arranged in matrix form as

Note that the fact that  is determined only up to a constant, does not influence the canonical form of the spectral decomposition. This can be seen by replacing

is determined only up to a constant, does not influence the canonical form of the spectral decomposition. This can be seen by replacing  by

by  , in matrix form

, in matrix form  by

by  with

with  , in the canonical form, which leads to

, in the canonical form, which leads to

where we utilized the commutativity of the diagonal matrices  .

.

An example for spectral decomposition

An example for spectral decomposition

Continuing the the example for determining eigenvalues an eigenvectors of the matrix

now we show the spectral decomposition of matrix

.

So far we have determined the right eigenvalues  and

and  , and the right eigenvectors

, and the right eigenvectors  and

and  belonging to them.

belonging to them.

Composition of diagonal matrix  The diagonal elements of matrix

The diagonal elements of matrix  are the eigenvalues

are the eigenvalues  and

and  . Thus matrix

. Thus matrix  is given by

is given by

Composition of matrix  The columns of matrix

The columns of matrix  are the eigenvectors

are the eigenvectors  and

and  . Thus matrix

. Thus matrix  is given as

is given as

Determination of the inverse of matrix

The spectral decomposition of matrix

Conditions for the existence of spectral decomposition

Conditions for the existence of spectral decomposition

Note that not every square matrix is diagonalisable. Two useful conditions for  matrix

matrix  to be diagonalisable can be given as

to be diagonalisable can be given as

- The spectral decomposition of matrix

exists if and only if the number of linearly independent right eigenvectors of

exists if and only if the number of linearly independent right eigenvectors of  equals to

equals to  .

.

- A sufficient condition for the existence of spectral decomposition of matrix

is that its characteristic polynomial has no repeated roots.

is that its characteristic polynomial has no repeated roots.

Based on the first condition, the canonical form of the spectral decomposition can be derived. The column vectors of  are linear independent, so it is non-singular and hence exists the inverse matrix

are linear independent, so it is non-singular and hence exists the inverse matrix  . Multiplying the matrix form relation

. Multiplying the matrix form relation  by

by  from right gives

from right gives

from which

falls out resulting the canonical form of the spectral decomposition.

Application of spectral decomposition

Application of spectral decomposition

Spectral decomposition is used im many area of the natural sciences. Below we list several typical example for using spectral decomposition.

- Calculating a power of matrix

- Expressing the inverse of matrix

exists if and only if

exists if and only if  for every

for every  .

.

- Expressing matrix function

of matrix

of matrix  .

.

Here  defined by the help of power series of f()

defined by the help of power series of f()

- Showing the convergence of

Matrix norms

Eigenvalues can be considered to be a set of numbers characterizing the magnitude of a square matrix. Often the eigenvalue with the highest magnitude has outstanding importance. Besides of it, there is a need for characterising the magnitude of a square matrix only by one number, analogously to the absolute value of a real or complex number.

The matrix norm is a measure characterizing the magnitude of a square matrix by one number. The matrix norm is denoted by  , e.g. the norm of the square matrix

, e.g. the norm of the square matrix  is

is  .

.

Definition of several matrix norms

Definition of several matrix norms

On the contrary to the uniqueness of the absolute value, more matrix norms can be defined. Let  be the

be the  -th element of the

-th element of the  square matrix

square matrix  . Then several martix norms can be given as

. Then several martix norms can be given as

- Max norm

- Max row sum

norm

norm

General properties of matrix norms

General properties of matrix norms

Matrix norms are useful tools e.g. in theoretical derivations, like e.g. to prove the existence of an upper limit. In fact in most of the cases the concrete form of the matrix norm is not interesting. Instead some properties of the matrix norm are utilized. These important general properties of matrix norm are listed for  square matrices

square matrices  and

and  as

as

Useful matrix norms also have the following additional property

.

All the three above defined matrix norms have this additional property. Let  and

and  be the

be the  -th and

-th and  -th element of the

-th element of the  square matrix

square matrix  and

and  , respectively . Then the additional property can be shown for these matrix norms as follows.

, respectively . Then the additional property can be shown for these matrix norms as follows.

- Max norm

- Max row sum

norm

norm

Several typical applications of matrix norms are given below.

- To show that a norm of a matrix expression is upper limited.

- To show that

, when

, when  .

.

- To show that

convergent is, when

convergent is, when  .

.

Application of linear algebra

Solving system of equations

System of linear equations

System of linear equations

Let us consider the system of linear equations with  unknowns and

unknowns and  equations, i.e. with

equations, i.e. with  square matrix

square matrix  . This system of linear equations can be written in matrix vector form as

. This system of linear equations can be written in matrix vector form as

Inhomogeneous system with

Inhomogeneous system with

Let us consider the case with  , which is an important subclass of system of linear equations. Then there is always a unique solution, since the rank can not be changed via extending

, which is an important subclass of system of linear equations. Then there is always a unique solution, since the rank can not be changed via extending  to

to  , due to

, due to  . The solution can be given in closed form by multiplying the matrix vector equation by

. The solution can be given in closed form by multiplying the matrix vector equation by  from left, which gives the closed form solution as

from left, which gives the closed form solution as

Note that the inverse matrix exists in this case, since matrix  is non-singular due to

is non-singular due to  .

.

Homogeneous system with

Homogeneous system with

Recall that the equation

holds for the matrix

. If

then this leads to

Let  denotes the

denotes the  -th column vector of

-th column vector of  ,

,  . It follows that any column vector

. It follows that any column vector  is a solution of the homogeneous equation

is a solution of the homogeneous equation  with

with  . If additionally

. If additionally  , then there is only one free parameter. Since any constant multiplication of a column vector

, then there is only one free parameter. Since any constant multiplication of a column vector  is also a solution, it follows that

is also a solution, it follows that

,

,  gives every solution of the homogeneous equation

gives every solution of the homogeneous equation  with

with  and

and- the columns of

are constant multiplications of each other if

are constant multiplications of each other if  .

.

System of non-linear equations

System of non-linear equations

Let us consider the following system of non-linear equations with  unknowns and

unknowns and  equations:

equations:

Introducing the notations

this can be rewritten as

Solving of this system of non-linear equations is equivalent with minimization problem

since the square of each element in  is non-negative. The gradient of

is non-negative. The gradient of  can be expressed as

can be expressed as

where  is the Jacobian matrix of

is the Jacobian matrix of  . Then the minimization problem can be solved numerically by applying the gradient descent algorithm. This can be executed by initializing

. Then the minimization problem can be solved numerically by applying the gradient descent algorithm. This can be executed by initializing  as

as

and performing the iterative steps

where  is the step size and can be determined by any of the line search methods (see in 3.2.3).

is the step size and can be determined by any of the line search methods (see in 3.2.3).

Numeric optimization

Gradient methods

The gradient methods are numeric methods for unconstrained mathematical optimization. They can be applied to differentiable multivariate functions. They are usually formulated as finding the minimum of a multivariate function. All of them use the gradient of the multivariate function, this explains the name.

The basic idea of gradient methods is to decrease the function value in each step of the algorithm. It is well known that at the given point  of the multivariate function,

of the multivariate function,  x

x , the infinitesimal difference in the function value is the highest in the direction of the gradient at that point,

, the infinitesimal difference in the function value is the highest in the direction of the gradient at that point,  . Therefore the steepest decent is in the opposite direction of the gradient. The iterative step of any gradient methods takes a step in a direction

. Therefore the steepest decent is in the opposite direction of the gradient. The iterative step of any gradient methods takes a step in a direction  which is related to the negative gradient at the current point,

which is related to the negative gradient at the current point,  . The step size is denoted by

. The step size is denoted by  . Hence the iterative step of any gradient methods can be given as

. Hence the iterative step of any gradient methods can be given as

Note that both the step size  and the vector

and the vector  can depend on the iteration step.

can depend on the iteration step.

Requirements and conditions to decrease the function value in each iteration

Requirements and conditions to decrease the function value in each iteration

In order to decrease the function value in each step, two requirements must be made.

It must be ensured that the

It must be ensured that the  infinitesimally shows into a descent direction.

infinitesimally shows into a descent direction. The step size

The step size  must be enough small to avoid overshooting the nearest local minimum and thus to avoid leading to divergence.

must be enough small to avoid overshooting the nearest local minimum and thus to avoid leading to divergence.

The first requirement is equivalent with showing that the negative gradient  and

and  and vector

and vector  lie on the same side of the hyperplane being perpendicular to the gradient. In this case their scalar product is positive. This leads to the condition

lie on the same side of the hyperplane being perpendicular to the gradient. In this case their scalar product is positive. This leads to the condition  ensuring

ensuring  as

as

The second requirement can be fulfilled by formalising "enough small" by specifying a demanded amount of decrease in function value. This is done by the Armijo condition demanding that the decrease in function value should be at least proportional to the product of the step size  and the directional derivative

and the directional derivative  . Hence the

. Hence the  can be fulfilled by condition

can be fulfilled by condition  , which is the Armijo condition and given as

, which is the Armijo condition and given as

where  is the constant of proportionality. In the practice usually

is the constant of proportionality. In the practice usually  is set to small values, like e.g.

is set to small values, like e.g.  . Observe that on both sides of this inequality there are negative values.

. Observe that on both sides of this inequality there are negative values.

Determination of the step size

Determination of the step size

If the step size were set to too small then the convergence would become too slow. This leads to a third requirement as

The step size

The step size  must be enough large to avoid slowing the convergence. The requirements

must be enough large to avoid slowing the convergence. The requirements  and

and  together ensure an optimal step size.

together ensure an optimal step size.

The three most important search methods for determining the optimal step size are

- Exact line search

- Inexact line search

- Backtracing line search

The exact line search determines the optimal step size  by minimizing the function value after taking the next step. This leads to a one-dimensional minimization problem as

by minimizing the function value after taking the next step. This leads to a one-dimensional minimization problem as

The exact line search are used only seldom in practice due to its high computing effort.

In the inexact line search the step size, starting from an initial value, like e.g.  , is iteratively decreased until the function value after the step will be less than the current one, in other words until

, is iteratively decreased until the function value after the step will be less than the current one, in other words until

The backtracing line search searches for "enough small" step size by utilizing the Armijo condition. Starting from an initial step size, the step size is iteratively multiplied by a properly selected constant of proportionality,  until the Armijo condition fulfils.

until the Armijo condition fulfils.

While exact line search fulfils both requirements  and

and  , inexact line search fulfils only

, inexact line search fulfils only  and backtracing line search lies somewhere in between. Because of this and its simplicity, it is often used in the practice.

and backtracing line search lies somewhere in between. Because of this and its simplicity, it is often used in the practice.

The pseudo-code of an algorithm for gradient method with backtracing line search can be seen in Algorithm 9.

Algorithm 9 Gradient method - with backtracing line search

—————————————————————————————

Inputs: - multivariate function

- initial value

- precision value

Outputs:

- found local minimum place

- local minimum

—————————————————————————————

1 Initialisation  ,

,

2 while

3

4 Compute gradient

5 Compute vector

—– Backtracing line search - begin —

6 Init backtracing line search  , set

, set

7 while

8

9 end

—– Backtracing line search - end —-

10 Update  as

as

11 end

12 return  and

and

—————————————————————————————

The gradient descent

The gradient descent

The gradient descent algorithm is a special case of the gradient methods by setting  to be the steepest descent, in other words

to be the steepest descent, in other words  . Thus gradient descent is a first-order method and its iterative step can be given as

. Thus gradient descent is a first-order method and its iterative step can be given as

In the context of deep learning the step size  is also called as learning rate. The pseudo-code of the gradient descent algorithm is given in Algorithm 10.

is also called as learning rate. The pseudo-code of the gradient descent algorithm is given in Algorithm 10.

Algorithm 10 Gradient descent algorithm

—————————————————————————————

Inputs: - multivariate function

- initial value

- precision value

Outputs:

- found local minimum place

- local minimum

—————————————————————————————

1 Initialisation  ,

,

2 while

3

4 Compute gradient

5 Update learning rate by setting

6 Update  as

as

7 end

8 return  and

and

—————————————————————————————

The convergence to local minimum can be guaranteed under certain assumptions on function  and specific choice s of the dependency of learning rate

and specific choice s of the dependency of learning rate  on

on  ,

,  ,

,  and

and  . If function

. If function  is convex, then local minimum is also the global minimum.

is convex, then local minimum is also the global minimum.

The extension of gradient descent, the stochastic gradient descent algorithm and its improved variants are the most commonly used numeric optimization algorithms for training deep neural networks.

Further special cases of gradient methods

Further special cases of gradient methods

Several further algorithm can be obtained as special cases of gradient methods by setting vector  specially. These includes

specially. These includes

- Diagonal scaled gradient descent and

- Newton’s method.

The diagonal scaled gradient descent algorithm is obtained by setting

, where

, where  is a diagonal matrix. Note that just like vector

is a diagonal matrix. Note that just like vector  , matrix

, matrix  can also depend on the iteration step.

can also depend on the iteration step.

The Newton’s method, also called as Newton–Raphson method applied to optimization can be also obtained by setting  with

with  , where

, where  is the Hessian matrix. Since the Hessian matrix includes second order derivatives of function

is the Hessian matrix. Since the Hessian matrix includes second order derivatives of function  , Newton’s method is a second order optimization method. For the class of convex functions the Newton’s method converges to the minimum quadratically fast.

, Newton’s method is a second order optimization method. For the class of convex functions the Newton’s method converges to the minimum quadratically fast.

Second-order algorithms

The second-order algorithms use the second order information in their original formulation from the Hessian matrix. The following second-order methods are considered

- Newton’s method

- Quasi-Newton methods

- Non-linear conjugate gradient algorithm

Newton methods

Newton methods

Newton’s method for local optimization is based on the second order Taylor-expansion of the function to be optimized. We apply it to minimize the function  . The second order Taylor-expansion of

. The second order Taylor-expansion of  around

around  is given as

is given as

The method chooses the next value of the parameter vector

as the minimum of the above Taylor-expansion form. It is well known that the necessary condition of

being the minimum is that the gradient of

must be

. Computing the gradient of

with respect to

and setting it to

gives

from which the next value of the parameter vector

can be expressed by setting

as

which is the same formula as we got by setting

in the general iteration relation of the gradient methods. This recursive computational rule utilizes also the second order information in Hessian matrix. If the function to be minimized is locally quadratic then the method jumps in one step to the minimum. Otherwise it iterates in quadratic steps and hence faster than the gradient descent. There are two major issues with the Newton’s method as

- Limitation - The convergence of the method is ensured only for locally convex regions. It can move in wrong direction near a saddle point.

- Drawback - The numerical complexity of the method is

, that is higher than that of the first-order methods. Here

, that is higher than that of the first-order methods. Here  is the number of parameters, i.e. the size of vector

is the number of parameters, i.e. the size of vector  . This is due to the computation of the inverse Hessian in each iteration step.

. This is due to the computation of the inverse Hessian in each iteration step.

Quasi-Newton methods

Quasi-Newton methods

In many applications, like e.g. in machine learning and in deep learning trainings, the the number of parameters,  is usually in magnitude of thousands and millions. Therefore Newton’s method can not be applied directly due to its numerical complexity in such applications.

is usually in magnitude of thousands and millions. Therefore Newton’s method can not be applied directly due to its numerical complexity in such applications.

Instead the quasi-Newton methods can be applied, in which the Hessian matrix is approximated by the matrix  , which needs much less computation effort. The matrix

, which needs much less computation effort. The matrix  is chosen to satisfy the so called secant equation

is chosen to satisfy the so called secant equation

This leads to a significant reduction in the computational complexity.

The most prominent quasi-Newton method is the

Broyden–Fletcher–Goldfarb–Shanno (BFGS) algorithm. It uses the matrix  only for determining the search direction, but it does not apply the quadratic step size like in the original Newton’s method. Instead a line search is performed. If the function is not convex then the step size is determined by finding a point satisfying the Wolfe conditions. The numerical complexity of the BFGS algorithm is

only for determining the search direction, but it does not apply the quadratic step size like in the original Newton’s method. Instead a line search is performed. If the function is not convex then the step size is determined by finding a point satisfying the Wolfe conditions. The numerical complexity of the BFGS algorithm is  . However the algorithm stores the matrix

. However the algorithm stores the matrix  in each iteration step leading to the memory need

in each iteration step leading to the memory need  . This makes it not appropriate for using in DL.

. This makes it not appropriate for using in DL.

A further improvement of BFGS is the Limited Memory BFGS (L-BFGS), whose memory need is  to

to  depending on the amount of information used from previous iteration at updating matrix

depending on the amount of information used from previous iteration at updating matrix  . The method converges faster in locally convex environment than in a non-convex one.

. The method converges faster in locally convex environment than in a non-convex one.

Non-linear conjugate gradient algorithm

Non-linear conjugate gradient algorithm

One potential problem of gradient descent algorithm, that in narrow valley it takes a zig-zag route, which slows the convergence. This is because the consecutive gradients are orthogonal and therefore some part of progress already achieved in the previous gradient direction will be undo.

The conjugate gradient algorithm overcomes this problem by selecting the consecutive directions being so called conjugate gradient directions. In case of N-dimensional quadratic function it reaches the minimum exactly after  steps, since it does not undo progress made in previous directions. The next direction is determined as the linear combination of the actual gradient and the actual direction, in which the previous direction is weighted by

steps, since it does not undo progress made in previous directions. The next direction is determined as the linear combination of the actual gradient and the actual direction, in which the previous direction is weighted by  . Since the next and actual directions are conjugate gradients, the next direction can be determined on straightforward way by the help of the eigenvectors of the Hessian of the multivariate function to be minimized. The conjugate gradient algorithm was intended to solve a quadratic minimization problem, which is equivalent with solving a system of linear equations.

. Since the next and actual directions are conjugate gradients, the next direction can be determined on straightforward way by the help of the eigenvectors of the Hessian of the multivariate function to be minimized. The conjugate gradient algorithm was intended to solve a quadratic minimization problem, which is equivalent with solving a system of linear equations.

The non-linear conjugate gradient algorithm is a generalization of the conjugate gradient algorithm to find a minimum of any non-linear multivariate function. In this case the algorithm does not stop after  steps, so a small modification is required in order to restart search eventually in the direction of unaltered gradient. This is performed by periodic reset of

steps, so a small modification is required in order to restart search eventually in the direction of unaltered gradient. This is performed by periodic reset of  to

to  . In case of large

. In case of large  , which is the case of e.g. in training deep learning models, the computation of the Hessian matrix requires a high computational effort. Therefore computationally more efficient ways were proposed to compute parameter

, which is the case of e.g. in training deep learning models, the computation of the Hessian matrix requires a high computational effort. Therefore computationally more efficient ways were proposed to compute parameter  . Two of the best known formulas for computing

. Two of the best known formulas for computing  are given as follows:

are given as follows:

- Fletcher-Reeves formula:

- Polak-Ribiere formula:

The pseudo-code of the algorithm is given in Algorithm 11.

Algorithm 11 Non-linear conjugate gradient algorithm

—————————————————————————————

Inputs: - multivariate function

- initial value

- precision value

Outputs:

- found local minimum place

- local minimum

—————————————————————————————

1 Initialisation  ,

,

2 Initialize conjugate direction

3 while

4

5 Compute gradient

6 Update conjugate direction

7 Perform line search

8 Update  as

as

9 end

10 return  and

and

—————————————————————————————

The Quasi-Newton methods converge potentially much faster than the non-linear conjugate gradient algorithm.

![{\textstyle [\ ]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/cd5b89a327df19fafa2e0a27ef0887d769ed5306)

![{\displaystyle {\bf {A}}=[\ a_{ij}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/59d5a09497c72e293bdef864a49c188dc7850c6c)

![{\displaystyle {\bf {I}}=[\ z_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/b7b8a83ccc737859e53e4f93122ffc4809d98401)

![{\textstyle {\bf {A}}=[\ a_{ij}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/d5b21c5e2d0f6665843c07a9a31ad40d75d2706a)

![{\displaystyle \ =[\ b_{i}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/24c1427c1ec7c4723e0e65d447d0b568448e86e4)

![{\textstyle [\ b_{i}]\ }](https://wikimedia.org/api/rest_v1/media/math/render/svg/cd0e5fcaa20f7c5ff5117682f1836d0300a26145)

![{\displaystyle \sum _{j=1}^{n}[\ a_{ij}]\ x_{j}=[\ b_{i}]\,}](https://wikimedia.org/api/rest_v1/media/math/render/svg/cba9bcf9f9c99bb3ece189adb0dd05bdcbac3d28)